Backpropagation is a method of training an Artificial Neural Network (ANN). If you are reading this post, you probably already have an idea of what an ANN is. Still, let's take a look at the fundamental component of an ANN: the artificial neuron.

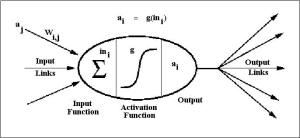

The figure shows how the ith neuron (let's call it $latex N_i$) in an ANN works. Its output (also called its activation) is $latex a_i$. The jth neuron $latex N_j$ provides one of the inputs to $latex N_i$.

How does $latex N_i$ produce its own activation?

1. $latex N_i$ stores a weight for each of its inputs. Let's say that the weight $latex N_i$ assigns to $latex N_j$ is $latex w_{i, j}$. As the first step, $latex N_i$ computes a weighted sum of all its inputs. Let's call it $latex in_i$. Therefore,

$latex in_i = \sum_{k \in Inputs(N_i)}…
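The weighted-sum step described above can be sketched in a few lines of Python. This is only an illustration, not code from the original post: the function name `weighted_input`, the dictionary layout, and the sample numbers are all assumptions, and it assumes each input neuron contributes its activation multiplied by the weight $latex N_i$ assigns to it.

```python
def weighted_input(weights, activations):
    """Weighted sum of a neuron's inputs.

    weights:     maps each input neuron's label k to w_{i,k}
                 (hypothetical layout, chosen for this sketch)
    activations: maps each input neuron's label k to its activation a_k
    """
    return sum(weights[k] * activations[k] for k in weights)


# Made-up values for a neuron N_i with two inputs, labelled "j" and "k".
weights_i = {"j": 0.5, "k": -1.0}
activations = {"j": 2.0, "k": 0.25}

in_i = weighted_input(weights_i, activations)
print(in_i)  # 0.5 * 2.0 + (-1.0) * 0.25 = 0.75
```

Keying both dictionaries by the input neuron's label mirrors the sum over $latex Inputs(N_i)$: only the neurons that actually feed $latex N_i$ appear in `weights_i`, so nothing else enters the sum.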
